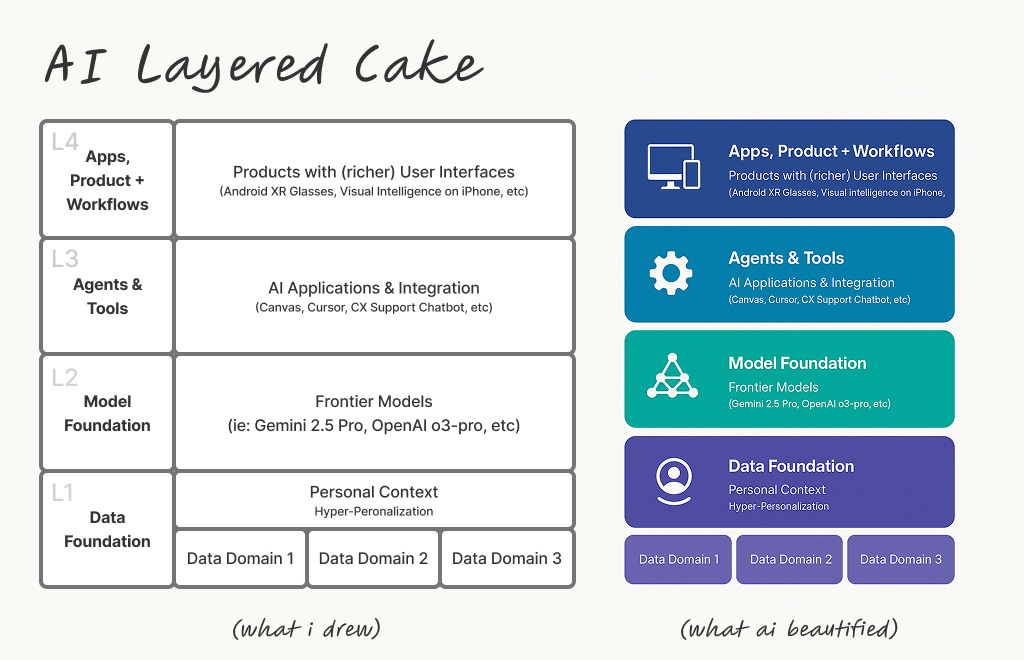

My Read on What ACTUALLY Released at Google I/O: The AI Layered Cake

So, Google I/O (or should I say, Gemini I/O?) made it clear: AI is now at the core of everything. And it's a layered cake - here's a quick recap (your CliffsNotes bookmarks) of everything they announced in the keynote, plus my read on their deeply layered AI experience.

1. Google I/O Release Recap in a Layered Cake

Layer 1 - Data, and More Personalized Data for Added Context

Personal Context - Google's hyper-personalization foundation - allows you to connect your information across Google apps. With grounded context personalized to you, the generated content is more relevant and useful. For example, AI gets way more context by tapping into your data (like Gmail integration) to truly understand and assist you. That said, this might sound like a privacy trap, depending on where you stand on sharing your data.

Layer 2 - Better Frontier Models

AI generating video, music, and even more advanced multimodal models.

Native Audio Output - natural and expressive speech generation that allows live language switching with the same voice.

Gemini Diffusion - a new text-generating AI model that performs much faster than typical transformer-based models. You've seen how Stable Diffusion or Midjourney works, right? Think that, but for text, and it runs much, much faster. So fast that Google had to slow down the video in the demo. These models draft the whole output, remove noise, and iterate on it, instead of spitting out one token at a time until EOS like autoregressive transformers do. That said, they're not common yet, as the quality is generally not as good as that of autoregressive transformers.
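To make the speed argument concrete, here is a toy sketch of the two decoding styles. It is not how Gemini Diffusion works internally (Google hasn't published that level of detail here); the "model" is just an oracle that already knows the target sentence, and the names `autoregressive_decode`, `diffusion_style_decode`, and `tokens_per_step` are made up for illustration. The only point is the step count: one model call per token versus a few refinement passes that each fill in several positions at once.

```python
import random

TARGET = "the quick brown fox jumps over the lazy dog".split()
MASK = "_"

def autoregressive_decode(target):
    """Emit one token per step, left to right."""
    out, steps = [], 0
    for token in target:  # stand-in for one model call per generated token
        out.append(token)
        steps += 1
    return out, steps

def diffusion_style_decode(target, tokens_per_step=3, seed=0):
    """Start fully masked, then fill in several positions per refinement pass."""
    rng = random.Random(seed)
    out = [MASK] * len(target)
    steps = 0
    while MASK in out:
        masked = [i for i, tok in enumerate(out) if tok == MASK]
        # Assumption: an oracle supplies the right token; a real model would
        # predict (and possibly revise) tokens from a noisy draft.
        for i in rng.sample(masked, min(tokens_per_step, len(masked))):
            out[i] = target[i]
        steps += 1
    return out, steps

ar_out, ar_steps = autoregressive_decode(TARGET)
df_out, df_steps = diffusion_style_decode(TARGET)
print(ar_steps, df_steps)  # prints "9 3"
```

Both functions end with the same sentence; the second just gets there in a third of the passes. In a real system each pass costs compute too, so the actual speedup depends on the model, not just the step count.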

Gemini Deep Think - This is Google's version of OpenAI’s "pro" models, which think deeply (no pun intended) by analyzing the prompt first and then synthesizing the results. As fancy as it is, it's likely limited to power-hungry use cases such as research. Let's hope Google doesn't pull a GPT-4.5 on us.

World Models - generative AI models designed for creating realistic simulations and understanding the world through models. Google also claims that they "can make plans and imagine new experiences by understanding and simulating aspects of the world, just as the brain does".

Imagen 4 - image generation model, and 10x faster. Text rendering within the image is spot-on and comes in different styles; see this post's cover image.

Veo 3 - lets you add sound effects, ambient noise, and even dialogue to your creations – generating all audio natively.

Lyria 2 - generates new instrumental music tracks from a text prompt.

Flow - AI filmmaking tool custom-designed for Google's models, similar to OpenAI's Sora. You can generate video scene by scene and frame by frame.

Layer 3 - Agent and Tool Use

Big focus on "tool use" so Gemini can actually complete tasks (e.g., Google Tasks). This is where the app ecosystem will boom, though that last-mile integration is key.
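If "tool use" sounds abstract, the core mechanic is simple: the model emits a structured request, and your app runs the matching function. Here's a minimal sketch under my own assumptions. The `add_task` tool, the JSON shape, and `run_tool_call` are all hypothetical and not the Gemini SDK's or MCP's actual format; the model output is hard-coded so the example runs on its own.

```python
import json

def add_task(title: str, due: str) -> dict:
    """Hypothetical tool: pretend to create a to-do item."""
    return {"status": "created", "title": title, "due": due}

# Registry of tools the app is willing to run on the model's behalf.
TOOLS = {"add_task": add_task}

def run_tool_call(model_output: str) -> dict:
    """Parse a JSON tool call emitted by the model and dispatch it."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        return {"status": "error", "reason": f"unknown tool: {name}"}
    return TOOLS[name](**call["arguments"])

# Stand-in for what a model might emit (assumed format, not a real API response).
fake_model_output = (
    '{"name": "add_task", '
    '"arguments": {"title": "Book flights", "due": "2025-06-01"}}'
)
result = run_tool_call(fake_model_output)
print(result)
```

The "last mile" shows up in the parts this sketch skips: validating arguments, handling failures, and deciding which tools the model is allowed to call at all.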

Gemini SDK with Native MCP Support - Google Chrome, Search, and the Gemini app will also gain agentic capabilities.

Agent Mode (vs AI Mode) - Agentic tools, or "digital labor" as Marc Benioff called it earlier in the year. This is Google's take on it: going beyond AI Mode with Agent Mode. Google is adding "Agent Mode" to the Gemini app, allowing users to delegate complex tasks to the AI, like finding an apartment. Of course, all of these agentic tasks require the hyper-personalization we talked about above.

Better Canvas Support - This is one of the first tools that I tested with other LLM providers too. Claude Sonnet 3.7 (though Claude Sonnet 4 just dropped as I'm writing this post) was by far the best LLM at tool use at the time. The new Gemini Canvas now adds file uploads and transformation.

Project Mariner - Project Mariner is a research prototype exploring the future of human-agent interaction, starting with browsers. Project Mariner is still in a very early stage, but the potential there is huge!

Layer 4 - Richer Products and User Interfaces

Okay, now, why do you think Apple added a camera button on iPhone 16s? More direct interface for AI and AI features.

Same deal here: expect Gemini everywhere - Search, Chrome, Lens - with multimodal inputs (talk, type, point!).

Google Beam (Project Starline) - 3D multi-camera video conferencing system that aims to create a more natural and immersive remote communication experience. Plus, they added real-time translation to Google Meet.

Gemini Live (Project Astra) - a feature within the Gemini mobile app that enables you to have natural, free-flowing conversations with Gemini. It now supports camera and screen sharing.

Android XR Glasses - Google Glass gen 2? Early stages, but with Gemini integrated, yet another way to interact with Google's AI tools. Looks better than Meta Ray-Ban Glasses.

Finally, the introduction of the Google AI Ultra plan and the continued focus on cheaper, faster APIs (if you're a Gemini API user, they still offer by far the lowest cost per token and better quality in general) clearly signal Google's strategy: monetization through premium offerings, and broad adoption through accessible developer tools.

2. My Strategic Take: Build to Your Strengths, Don't Boil the Ocean

Seeing this "layered cake" laid out by a giant like Google for Developers reinforces a crucial strategic point for everyone else in the ecosystem: don't try to reinvent commodity wheels; build what's unique to you and leverage the rest.

Here's how I think about applying this to the layers:

Layer 1 (Data): This is Your Goldmine

My advice here is simple: focus 80% of your unique effort on your data. This is the layer no one else can replicate for your specific context. High-quality, proprietary, well-structured data, well interpreted and labeled (tagging Data Advocacy/Stewardship expert Pamela Zirpoli) is your biggest differentiator. The better the data you provide to any model (whether Google's or another), the better, more personalized, and more valuable your results will be. This is where your unique insights and customer understanding truly shine.

Layer 2 (Frontier Models): A Tiered Approach is Smart

For most of us, training a foundational "dragon" (a massive LLM or SLM) from scratch is a monumental task with questionable ROI, given the deep pockets required. Instead, a pragmatic, tiered strategy makes more sense:

Use Out-of-the-Box (OOTB) Frontier Models: Start here whenever possible. Leverage Google's (or other providers') powerful pre-trained models. Prompt engineering is your best friend for getting great results quickly.

RAG/CAG/Hybrid Generation: For more tailored outputs, ground the model's generation in your specific data using retrieval-augmented generation (RAG) or cache-augmented generation (CAG). This gives you more control, improves factual grounding, and costs relatively less than full fine-tuning.

Strategic Fine-Tuning: For very specific use cases where OOTB models plus RAG aren't enough, consider fine-tuning. But remember, this requires significant effort in preparing high-quality fine-tuning data, compute resources for training, and potentially higher costs at inference.

Train Your Own (Smaller) Model? Maybe, but Tread Carefully: Only consider training your own small/specialized language model (SLM) or even a large one (LLM) if you have an extremely compelling, unique dataset and a clear path to ROI that justifies the massive investment. Often, that last 20% of performance can be achieved more efficiently through the first three tiers.
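For the RAG tier in particular, the idea fits in a few lines: find the most relevant piece of your data, then put it in the prompt. The sketch below is deliberately crude and everything in it is an assumption for illustration. The sample `DOCS` are invented, and word overlap stands in for the embedding search a real system would use. There is no model call, just the prompt you would send.

```python
import re
from collections import Counter

# Invented sample knowledge base (your proprietary data would go here).
DOCS = [
    "Refunds are processed within 5 business days of approval.",
    "Premium support is available on weekdays from 9am to 5pm.",
    "Orders ship from our warehouse within 24 hours.",
]

def tokenize(text):
    """Lowercase and split into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())

def score(query, doc):
    """Count overlapping words (a crude stand-in for embedding similarity)."""
    q, d = Counter(tokenize(query)), Counter(tokenize(doc))
    return sum((q & d).values())

def retrieve(query, docs, k=1):
    """Return the k documents that share the most words with the query."""
    return sorted(docs, key=lambda doc: score(query, doc), reverse=True)[:k]

def build_prompt(query, docs, k=1):
    """Ground the question in retrieved context before sending it to a model."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

prompt = build_prompt("How long do refunds take?", DOCS)
print(prompt)
```

Notice where the quality comes from: the retrieval step can only surface what's in `DOCS`, which is the Layer 1 point about data all over again.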

Layer 3 (Tool-Use and Agents): Focus on Value-Driven Workflows.

When it comes to building agents and enabling tool use, the landscape is rich with open-source components and available services. My philosophy here is to leverage these existing building blocks whenever possible. Only build from scratch if there's a truly unique, compelling need that off-the-shelf solutions can't address. If anything, use what others have built, and learn from it as you build in-house to your advantage.

The real differentiator in this layer isn't necessarily the novelty of the individual tools, but the effectiveness and value of the workflows you orchestrate. Focus on designing agentic systems that solve real user problems or create significant efficiencies.

Crucially, ensure you have a solid feedback loop integrated into these workflows. This allows the system (and your team) to learn, improve, and drive increasingly higher value over time.

Layer 4 (Products and User Interface): Iterate Relentlessly on Customer Needs.

For the user interface – how people actually experience and interact with your AI-powered product – the approach should be deeply customer-centric and agile. Build to your customer's needs, not just to showcase technology.

Embrace the principles of Lean Startup: build, measure, learn. Release value in small, manageable batches. Gather user feedback early and often. Be prepared to iterate quickly based on what you learn. This iterative cycle is key to ensuring your interface is intuitive, effective, and genuinely enhances the user's ability to benefit from the underlying AI capabilities.

3. Final Thoughts

The Reality of "The Last Mile"

One thing that remains true, even with these incredible advancements, is the challenge of "the last mile." Whether you're "vibecoding" with an AI assistant like Cursor, building a sophisticated product, designing agentic workflows, or simply using an AI app as an end-user, it's highly likely you'll still need to refine, edit, or guide the AI's output for that final 20%.

LLMs are not yet perfect, especially when it comes to nuanced, high-stakes, or highly specific tasks. But that's okay! My take is to embrace the 80% win. Let AI do the heavy lifting, get you most of the way there incredibly fast, and then apply your human expertise, creativity, and critical thinking to polish that final 20%. Trying to force AI to achieve 100% perfection on its own can often be far more time-consuming and frustrating than this collaborative approach.

Keep Pushing the Envelope – The Pace Isn't Slowing Down!

The sheer volume of what Google released is a testament to the incredible pace of innovation in AI. It's genuinely amazing! And if history is any guide, the improvements are only going to get faster and more significant.

So, if your current app or product is "just getting by" with its existing LLM integration, don't get complacent. Keep pushing the envelope. The next generation of models will likely make your product significantly better, smarter, and more capable. And the beauty of it? Often, the work required on your side to upgrade can be as simple as changing an LLM model name in your configuration. The barrier to accessing these cutting-edge improvements is often lower than we think.
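Here's roughly what "changing a model name in your configuration" looks like when the model is kept out of your business logic. This is a sketch with placeholder names: `LLMConfig`, `build_request`, and the `example-model-v1`/`v2` identifiers are hypothetical, and the request shape isn't any specific provider's API.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class LLMConfig:
    model: str
    temperature: float = 0.2

def build_request(config: LLMConfig, prompt: str) -> dict:
    """Assemble a provider-agnostic request payload (hypothetical shape)."""
    return {
        "model": config.model,
        "temperature": config.temperature,
        "prompt": prompt,
    }

current = LLMConfig(model="example-model-v1")
# The upgrade: one field changes, the rest of the app stays the same.
upgraded = replace(current, model="example-model-v2")

print(build_request(upgraded, "Summarize this ticket."))
```

One caveat from experience: a new model can respond differently to the same prompts, so it's worth re-running whatever evaluation you have before flipping the switch in production.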

These are exciting times, and the "layered cake" is getting more sophisticated by the day. It's a lot to digest, but also a huge field of opportunity for those ready to build thoughtfully.

What are your big takeaways from Google I/O, or your thoughts on this "layered" future of AI?

#GoogleIO #AIStrategy