Apple's Unfair Advantage in AI - My Biased Read

So, the dust from Apple's WWDC is settling (though for some, the debate is just getting started!). The initial hot takes from the tech world and Wall Street amount to a collective "meh." I saw a lot of chatter, from the supposedly "subdued" feature list to the once-a-decade Liquid Glass redesign (I'm still waiting for UI/UX experts like Melanie Lantin to weigh in on that one!). The prevailing sentiment seems to be that Apple is still playing catch-up in the great AI race.

Well, here's my take: I think that view misses the forest for the trees entirely. Apple isn't that far behind; it's running a completely different marathon. It’s a long game, and it’s built on the one asset Apple has that no one else does at its scale: deep, foundational customer trust.

In a recent, very candid interview with The Wall Street Journal, Apple's Craig Federighi and Greg Joswiak gave us a rare peek behind the curtain that perfectly explains the company's entire strategy. Federighi admitted that for Siri, they had "real working software" with large language models ready to go. It wasn't just "demoware." So why didn't we get the mind-blowing, revolutionary Siri everyone expected?

In his own words, it simply didn't meet their quality bar for reliability. For a tool as personal and open-ended as an AI assistant, he said, it needs to be "really, really reliable." Shipping a v1 with an error rate that would disappoint users and erode trust just wasn't an option. So they made the tough, disciplined call to pivot to a "v2" architecture to get it right.

That single decision is the perfect lens through which to view everything Apple just announced.

Part I: Building for the Customer, Not the Hype Cycle

Apple’s AI - or "Apple Intelligence," as they've carefully branded it - is a masterclass in playing the long game. It’s methodical, deliberate, and yeah, maybe a little less flashy than the competition. But maybe, just maybe, that's all by design.

1. A Higher Bar for Quality and Trust

You probably still remember the 2008 moment when Steve Jobs pulled the first MacBook Air out of an interoffice envelope, a huge moment for Apple, as Tim Cook has called out. Well, did you know that at that very moment, Apple was hand-coding static HTML pages for its product launch site in 20+ languages to make sure every one of them met the quality bar?

This is just in Apple's DNA, and Craig's admission proves it's not just marketing speak. They are rarely the first to a new technology, but when they do arrive, they often strive to be the best and most thoughtfully integrated. Think about it: the iPhone, the iPad, the Apple Watch – none were the first of their kind, but they arguably defined their categories by waiting until the technology and user experience met their exacting standards.

This time is no different. We saw practical, genuinely helpful features announced, like Live Translation seamlessly integrated right into the core OS. This isn't some standalone app you have to download; it's a feature that works right where you need it, powered entirely by on-device intelligence. Your private conversations remain just that - private. This deliberate, privacy-first integration is the hallmark of their approach. They are more than willing to delay a full-blown "sentient Siri" to ensure that what they do release is secure, polished, and genuinely useful, thereby reinforcing the customer trust they've spent decades building.

2. Privacy-First - Hyper-Personalization Built on Trust

This, right here, is Apple’s key differentiator in AI, and where their long game on privacy becomes their "unfair advantage."

Here's the thing - the holy grail of AI isn't just a smarter chatbot that can get your trivia questions right. The ultimate winner in the AI race will be the one that provides the most deeply integrated, contextually aware, hyper-personalized assistance - without making you feel like your entire life is being mined for data. It’s an AI that truly knows you and can proactively make your life easier, precisely because you trust it enough to let it.

But what does that require? It requires access to the very core of your digital life: your emails, your messages, your photos, your calendar, your location, your contacts. An AI can't proactively tell you when to leave for the airport without seeing your flight details, checking your calendar for pre-flight meetings, and looking at live traffic. It can't summarize a project update without access to your emails and messages.

This deep data access is the biggest barrier for most companies because it demands an astronomical level of user trust. And this is where Apple's long-term strategy, their "Trust Moat," comes into focus. As one Forrester study highlighted by Martin Gill and Enza Iannopollo, consumers often trust Apple with their personal data more than they trust their own government!

Now, if you commute to San Francisco like me, or to any big city around the world for that matter, you're no stranger to the "Privacy. That's iPhone." billboards.

Apple has been meticulously laying the groundwork for this for years, and it's all coming together now:

Building a Walled Garden of Privacy: Remember when Apple rolled out App Tracking Transparency (ATT)? It created an economic shockwave for companies that rely on tracking users. A Federal Trade Commission working paper detailed how this move led to a ~21% fall in ad revenue for publishers from Apple users. It was a clear, long-term play that prioritized user trust over short-term partner profits, and it conditioned users to believe that Apple has their back on privacy.

On-Device as the Default: Did you know the Apple News app has personalized your news on-device from the very start? Your iPhone is already a personalization powerhouse because so much of the computation happens locally on the Neural Engine. Features like Face ID, searching for "beach" in your Photos library, and many Siri requests are handled entirely on your device. Your data isn't being shipped off to some server to train a model that benefits others; it stays with you. That's how Apple checks another box on privacy and trust.

Private Cloud Compute: This is the brilliant "have your cake and eat it too" solution. For AI tasks too complex for your device, Apple created PCC. As detailed in their technical documentation, it uses Apple silicon-based servers to process your data in a way that is stateless (your data is never stored), inaccessible (even to Apple employees), and verifiable (the software is public for security researchers to inspect). Your iPhone won't even send data to a PCC server unless it can cryptographically verify it's running this publicly audited software. This is a fortress built to earn the trust required for true personalization.
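To make the "verify before you send" rule concrete, here is a toy Python sketch of the client-side decision. It is a simplification under loudly stated assumptions, not Apple's actual protocol: real PCC relies on hardware-backed attestation and a public transparency log, whereas here the "log" is just a set of SHA-256 digests of published software images. `PUBLISHED_IMAGE_DIGESTS`, `Node`, and `route_request` are hypothetical names invented for illustration.

```python
import hashlib
from dataclasses import dataclass

# Hypothetical stand-in for a public transparency log: digests of the
# server software images that security researchers are able to inspect.
PUBLISHED_IMAGE_DIGESTS = {
    hashlib.sha256(b"pcc-node-image-v1").hexdigest(),
    hashlib.sha256(b"pcc-node-image-v2").hexdigest(),
}


@dataclass
class Node:
    """A hypothetical PCC server that reports a measurement of its software."""
    name: str
    software_image: bytes

    def attested_digest(self) -> str:
        # Real attestation is signed by hardware; this toy just hashes the image.
        return hashlib.sha256(self.software_image).hexdigest()


def route_request(task_fits_on_device: bool, node: Node) -> str:
    """Run on device first; otherwise only on a node running published software."""
    if task_fits_on_device:
        return "on-device"
    if node.attested_digest() in PUBLISHED_IMAGE_DIGESTS:
        return f"pcc:{node.name}"
    # The node's software is not in the public log, so nothing is sent.
    return "refused"


if __name__ == "__main__":
    good = Node("node-a", b"pcc-node-image-v2")
    tampered = Node("node-b", b"pcc-node-image-v2-with-logging")
    print(route_request(True, good))
    print(route_request(False, good))
    print(route_request(False, tampered))
```

The design point is the order of the checks: data leaves the device only when the task needs it and the destination proves it is running publicly inspectable software; anything else falls through to "refused," never to "send anyway."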

3. Build on Your Strengths, Partner for the Rest

Apple knows what it's good at: world-class hardware, seamless software integration, and unparalleled user experience. They also know when it makes sense to partner for a technology that has become a powerful commodity.

Understanding AI's Limits: It's no coincidence that just before WWDC, Apple researchers published a paper highlighting how even advanced Large Reasoning Models (LRMs) can fail when problems reach a certain complexity. Debatable as the paper is (some read it as a convenient saving grace), it shows Apple has a deep, sober understanding of the current tech's limitations and refuses to over-hype capabilities it can't yet deliver reliably.

The Pragmatic ChatGPT Integration: Instead of trying to build a "better-than-everyone" foundational model for world knowledge from scratch (a massive undertaking), they’ve partnered with the current leader, OpenAI. But notice how they did it: Siri will ask for your permission every single time it needs to consult ChatGPT. Your data isn't automatically shared, and OpenAI is contractually obligated not to store requests. It's a smart, pragmatic move that gives users more power without compromising Apple's core privacy promise.

The Google Search Deal Precedent: This isn't a new strategy for them. Apple doesn't have its own global search engine. Instead, they have a deal where Google pays them an estimated $20 billion a year to be the default. It’s a win-win. Google gets massive, high-value traffic, and Apple provides a seamless search experience without needing to build and maintain that global infrastructure. They are applying the exact same logic to generative AI.
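The per-request permission step in the ChatGPT integration above boils down to a simple gate. The Python sketch below is a hypothetical illustration of that flow as this post describes it, not Apple's implementation: `answer` and the four callbacks it takes (`needs_world_knowledge`, `ask_user_permission`, `call_chatgpt`, `answer_locally`) are made-up names standing in for Siri's routing, the consent prompt, and the two model back ends.

```python
from typing import Callable, Optional


def answer(
    query: str,
    needs_world_knowledge: Callable[[str], bool],
    ask_user_permission: Callable[[str], bool],
    call_chatgpt: Callable[[str], str],
    answer_locally: Callable[[str], str],
) -> Optional[str]:
    """Hand a query to the external model only after an explicit, per-request yes."""
    if not needs_world_knowledge(query):
        # Apple's own models handle it; nothing leaves Apple's stack.
        return answer_locally(query)
    # Mirroring the post's description: consent is asked on every request,
    # with no remembered "always allow" flag in this sketch.
    if ask_user_permission(query):
        return call_chatgpt(query)
    return None  # User declined, so nothing is shared with the partner.


if __name__ == "__main__":
    print(answer(
        "Plan a 3-day trip to Kyoto",
        needs_world_knowledge=lambda q: True,
        ask_user_permission=lambda q: False,  # the user taps "Don't Use"
        call_chatgpt=lambda q: "chatgpt: " + q,
        answer_locally=lambda q: "local: " + q,
    ))
```

Keeping the partner call behind a callback that only runs after consent makes the privacy promise structural: the declined path never touches `call_chatgpt` at all.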

Part II: Empowering Developers to Build the Future

An AI assistant is only as good as the ecosystem of apps and services it can connect to. Apple knows this, and they made some significant moves to empower developers that I think are being overlooked.

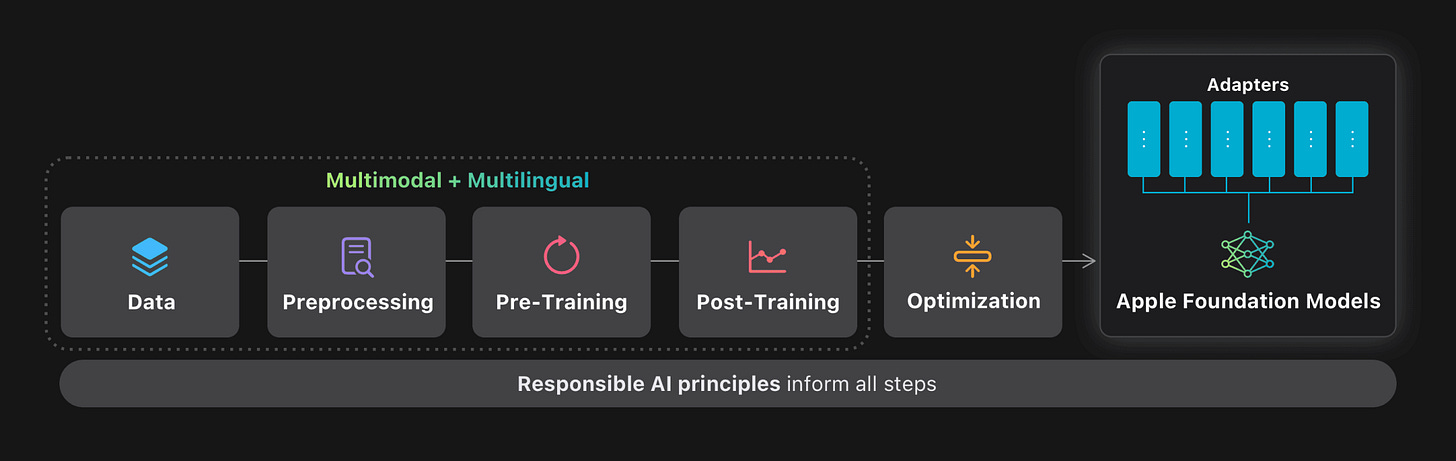

On-Device Foundation Models + Private Cloud Compute: For the first time, developers get direct access to Apple’s powerful on-device language models via the Foundation Models framework. This is a huge deal. It allows developers to build sophisticated, privacy-preserving AI features right into their apps that work even when you're offline, without racking up expensive cloud API costs.

Supercharged App Intents: But more importantly, they’ve supercharged App Intents. This framework is the quiet giant here – it’s the real key that will unlock Siri's true potential down the road. It allows developers to make their app's specific actions and content discoverable and actionable by the entire system. By encouraging developers to adopt this now, Apple is patiently building the nervous system for a much smarter Siri. When Siri does get its big "v2" upgrade, it will already have a rich, deep ecosystem of third-party app capabilities to tap into from day one.

AI-Powered Xcode for Developer Productivity: Apple is giving developers a serious productivity boost right in Xcode. With integrated LLM support, including from partners like OpenAI, developers can get help with things like predictive code completion, generating tests, and even debugging and fixing common errors. It's a pragmatic way to make the entire process of building apps smarter and faster. That said, I haven't coded in Xcode for a long time, so I'll leave the Xcode vs Cursor conversation for later.

So, When Do We Get the AI from "Her"?

Look, everyone wants that all-knowing, all-powerful AI friend from the movies. But the truth is, building a real-life "Her" is less about a single, magical chatbot and more about laying the right foundation first.

What Apple showed us at WWDC wasn't a finished, flashy temple (the one promised at last year's WWDC); it was the meticulous, painstaking, and maybe even a little "boring" work of laying a foundation of granite: on-device intelligence (or PCC), verifiable privacy, and deep developer integration.

The Siri that can seamlessly book a reservation for you, pull up that photo from your beach trip last summer, and summarize a chaotic group chat is coming. And when it arrives (yes, from a fanboy's perspective), it won't be a fragile house of cards built on scraped data and "best effort" reliability. It will be a fortress built on a bedrock (no pun intended) of trust - a product that customers will actually want to use deeply and that developers will be excited and empowered to support.

That’s Apple’s AI playbook. It’s not about winning the next news cycle; it’s about winning the next decade of customer trust and love, plus/minus some personal computing.

What's your take? Is Apple's patient, privacy-first approach the right one for the long term, or are they risking falling too far behind in the short term? I'd love to hear your thoughts!

#ApplesUnfairAdvantageinAI #AppleIntelligence #WWDC25 #AIStrategy #Privacy #CustomerTrust #TechPOV #Apple #Developer #Siri